⚠️ Warning: This documentation refers to the proof-of-concept implementation of the Wildland client, which is no longer maintained. We are currently working on a new Wildland client written in Rust. To learn more about Wildland and the current status of its development, please visit the Wildland.io webpage.

# A Practical Introduction to Wildland

Wildland is a collection of protocols, conventions, software, and (soon) a marketplace for leasing storage and, in the future, compute infrastructure. All these pieces work together with one goal in mind: to decouple the user's data from the underlying infrastructure.

This introductory guide is intended as a one-stop, short, hands-on introduction to the concepts behind Wildland and its basic usage. A more detailed introduction can be found in our Why What How paper.

We start by explaining a few fundamental concepts behind Wildland. Please read them.

# Containers

The fundamental concept in Wildland is that of a data container. Wildland containers are like Docker containers, except that Wildland is primarily concerned with data, rather than with code.

Implementation-wise, containers are like directories. They can be mounted within the filesystem and are then exposed as regular filesystem directories.

Each container can have a title and one or more categories, which are used to determine FS paths under which the container can be found, e.g.:

```yaml
title: Trip to Antarctica
categories:
  - /data/type/photos
  - /events/holidays
  - /places/Antarctica
  - /timeline/2022/02/30
paths:
  - /.uuid/60a26b55-b880-4267-84d2-817e3636ba45
```

To create a container like the one above you should use the wl container create command:

```shell
$ wl container create \
    --title "Trip to Antarctica" \
    --category /data/type/photos \
    --category /events/holidays \
    --category /places/Antarctica \
    --category /timeline/2022/02/30 \
    --template mydropbox
```

The last argument, `--template`, indicates which storage should be selected to host the container; storage templates are discussed below.

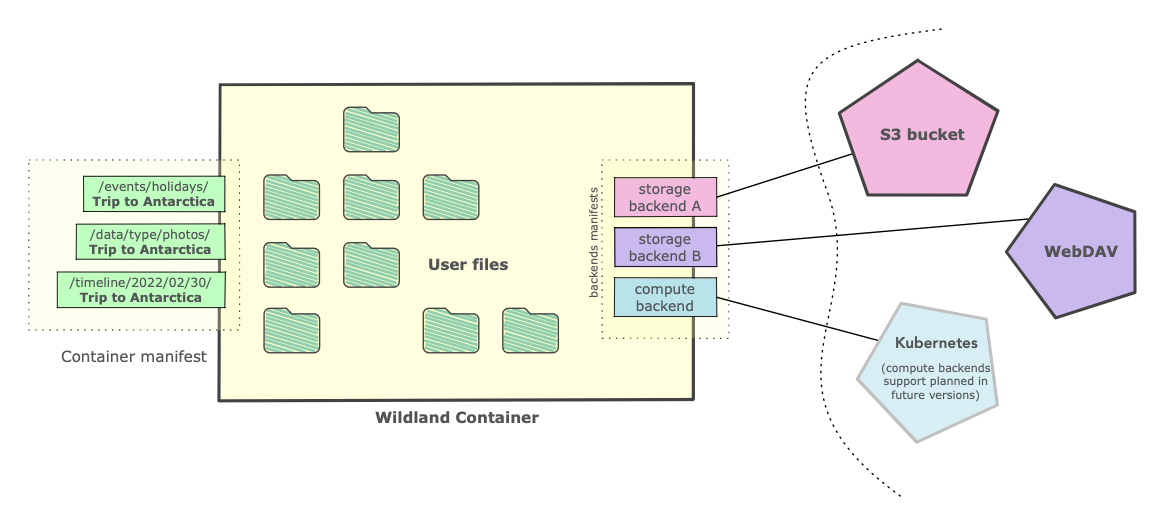

The YAML snippet shown earlier is part of what we call a container manifest. This is a YAML file containing several crucial pieces of information: where the container is stored (on which infrastructure), how the container is addressed (i.e. its title, categories, and paths, as shown above), the container owner's id (discussed below), and a digital signature made with the owner's key. Every manifest in Wildland is digitally signed, which makes it possible to store manifests on untrusted infrastructure.

The container can be addressed using any of its categories concatenated with the title, or using any of its paths (this time without the title appended), e.g.:

```
/data/type/photos/Trip to Antarctica/
/events/holidays/Trip to Antarctica/
/timeline/2022/02/30/Trip to Antarctica/
/.uuid/60a26b55-b880-4267-84d2-817e3636ba45
```

The paths mentioned above would typically be mapped within the user's filesystem. The reference Linux client uses `~/wildland` as its default mount point, which means all the paths above are relative to `~/wildland`.
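The addressing rule can be made concrete with a minimal Python sketch that derives every path a container claims from the manifest fields shown at the start of this section. The sketch assumes the manifest has already been parsed into a plain dict. `claimed_paths` and `to_mount_paths` are hypothetical helpers written for this guide, not functions of the Wildland client, and `~/wildland` is simply the default mount point mentioned above.

```python
# Hypothetical in-memory form of the container manifest shown at the start
# of this section (parsing the YAML itself is left out of the sketch).
manifest = {
    "title": "Trip to Antarctica",
    "categories": [
        "/data/type/photos",
        "/events/holidays",
        "/places/Antarctica",
        "/timeline/2022/02/30",
    ],
    "paths": ["/.uuid/60a26b55-b880-4267-84d2-817e3636ba45"],
}


def claimed_paths(manifest: dict) -> list[str]:
    """Return every path under which the container can be addressed.

    Each category gets the title appended; explicit paths are used as-is.
    """
    title = manifest["title"]
    from_categories = [f"{category}/{title}" for category in manifest["categories"]]
    return from_categories + list(manifest["paths"])


def to_mount_paths(paths: list[str], mount_root: str = "~/wildland") -> list[str]:
    """Prefix each claimed path with the mount point."""
    return [mount_root + path for path in paths]


for path in to_mount_paths(claimed_paths(manifest)):
    print(path)
```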

# Multi-categorization

More than one container might claim the same path. In that case their contents are merged and displayed under that common path. Consider, e.g., another container from the same trip to Antarctica, this time holding movies rather than photos (note that it claims the category `/data/type/movies` instead of `/data/type/photos`):

```yaml
title: Trip to Antarctica
categories:
  - /data/type/movies
  - /events/holidays
  - /places/Antarctica
  - /timeline/2022/02/30
paths:
  - /.uuid/2dd2004d-b6bc-4b56-8f2f-e797c90485a2
```

If the user navigated to `~/wildland/timeline/2022/02/30/Trip to Antarctica/`, she would see the files from both containers. If she accessed the container via any of its unique paths, however, she would see only the files from the latter. Examples of such paths are `~/wildland/data/type/movies/Trip to Antarctica/` and the UUID path (`/.uuid/...`).
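The merging behaviour can be illustrated with a small index from paths to the containers that claim them. This is a hypothetical sketch, not the client's implementation. Containers are keyed by made-up short names, and the sketch only records which containers back each path. Listing the merged files would then mean combining the contents of every container in that list.

```python
from collections import defaultdict

# Two hypothetical containers mirroring the manifests above, keyed by
# made-up short names.
containers = {
    "photos": {
        "title": "Trip to Antarctica",
        "categories": ["/data/type/photos", "/events/holidays",
                       "/places/Antarctica", "/timeline/2022/02/30"],
        "paths": ["/.uuid/60a26b55-b880-4267-84d2-817e3636ba45"],
    },
    "movies": {
        "title": "Trip to Antarctica",
        "categories": ["/data/type/movies", "/events/holidays",
                       "/places/Antarctica", "/timeline/2022/02/30"],
        "paths": ["/.uuid/2dd2004d-b6bc-4b56-8f2f-e797c90485a2"],
    },
}


def build_index(containers: dict) -> dict[str, list[str]]:
    """Map each claimed path to the names of all containers claiming it."""
    index: dict[str, list[str]] = defaultdict(list)
    for name, manifest in containers.items():
        claimed = [f"{c}/{manifest['title']}" for c in manifest["categories"]]
        claimed += manifest["paths"]
        for path in claimed:
            index[path].append(name)
    return dict(index)


index = build_index(containers)
print(index["/timeline/2022/02/30/Trip to Antarctica"])  # ['photos', 'movies']
print(index["/data/type/movies/Trip to Antarctica"])     # ['movies']
```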

We call this ability of containers to claim more than one path multi-categorization. We consider it an important feature, because it lets the filesystem serve as a powerful information and knowledge organization tool. There is more to this fascinating topic, which will be discussed in a separate article.

# Users and keys

In Wildland, each container is always owned by a specific user. Each user corresponds to a cryptographic key pair and is identified by a hash of its public key, such as:

```
0xb26e638663d445c1720168fffde90270157b7561f38f08cbbae9097c44c53fc9
```
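The sketch below, offered as an illustration only, derives an id of the same shape (`0x` followed by 64 hex digits) from raw public-key bytes. The guide does not say which hash function or key encoding Wildland uses. SHA-256 is an assumption, chosen because it yields a 256-bit digest, so the resulting ids will not match real Wildland user ids.

```python
import hashlib


def user_id_from_public_key(public_key: bytes) -> str:
    """Derive a 0x-prefixed, 64-hex-digit id from raw public-key bytes.

    ASSUMPTION: SHA-256 is used purely for illustration; the reference
    client's actual hash function and key encoding may differ.
    """
    return "0x" + hashlib.sha256(public_key).hexdigest()


# Placeholder bytes standing in for a real public key.
example_key = bytes(range(32))
uid = user_id_from_public_key(example_key)
print(uid, len(uid))  # length is 66: "0x" plus 64 hex digits
```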

To create a new user you can use the following command:

```shell
$ wl user create yatima
```

You can list and manage users via various sub-commands of `wl user`, e.g. `wl u ls`.

This is analogous to blockchain accounts. There are more similarities between Wildland and various blockchain concepts, but it should be noted that Wildland is not a blockchain, and it's not dependent on any blockchain technology either. However, the upcoming marketplace reference implementation, which we intend to release and deploy soon, will use Ethereum.

Just like containers, each user is represented by a user manifest. This is a YAML file that contains the user's full public key and is self-signed with that key.

It is possible for one group user id to represent a set of several public keys. This allows for more advanced setups, in which a single forest is de facto owned by several users. More details can be found here.

# Container addressing

Container addresses comprise two parts: the owner, and one of the paths which the container claims for itself:

```
wildland:<userid>:<container or bridge path>:
```

The `wildland:` prefix is a standard URI scheme selector.

For example, the address of the container from the first example above is:

```
wildland:0xcd5a...79:/timeline/2022/02/30/Trip to Antarctica/:
```

This syntax is recursive when instead of a container we use a bridge, as discussed below.
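A hypothetical Python parser for this syntax is sketched below. It assumes that path segments never contain a colon themselves. It treats whatever follows the last colon as an optional file path inside the final container. The `WildlandAddress` type and `parse_address` function are illustrative names, not part of the client.

```python
from dataclasses import dataclass
from typing import Optional

PREFIX = "wildland:"


@dataclass
class WildlandAddress:
    owner: str                # user/forest id, or an alias such as "@default"
    hops: list[str]           # bridge/container paths, each colon-terminated in the address
    file_path: Optional[str]  # optional path inside the final container


def parse_address(address: str) -> WildlandAddress:
    """Split a wildland: address into owner, path hops and optional file path.

    Sketch only: assumes no path segment itself contains a colon.
    """
    if not address.startswith(PREFIX):
        raise ValueError(f"not a wildland address: {address!r}")
    owner, *rest = address[len(PREFIX):].split(":")
    # Every hop ends with ':', so the last element after splitting is
    # whatever follows the final colon: empty, or a file path.
    *hops, tail = rest or [""]
    if not hops:
        raise ValueError("expected at least one colon-terminated path")
    return WildlandAddress(owner, hops, tail or None)


print(parse_address("wildland:0xcd5a...79:/timeline/2022/02/30/Trip to Antarctica/:"))
```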

# Forests

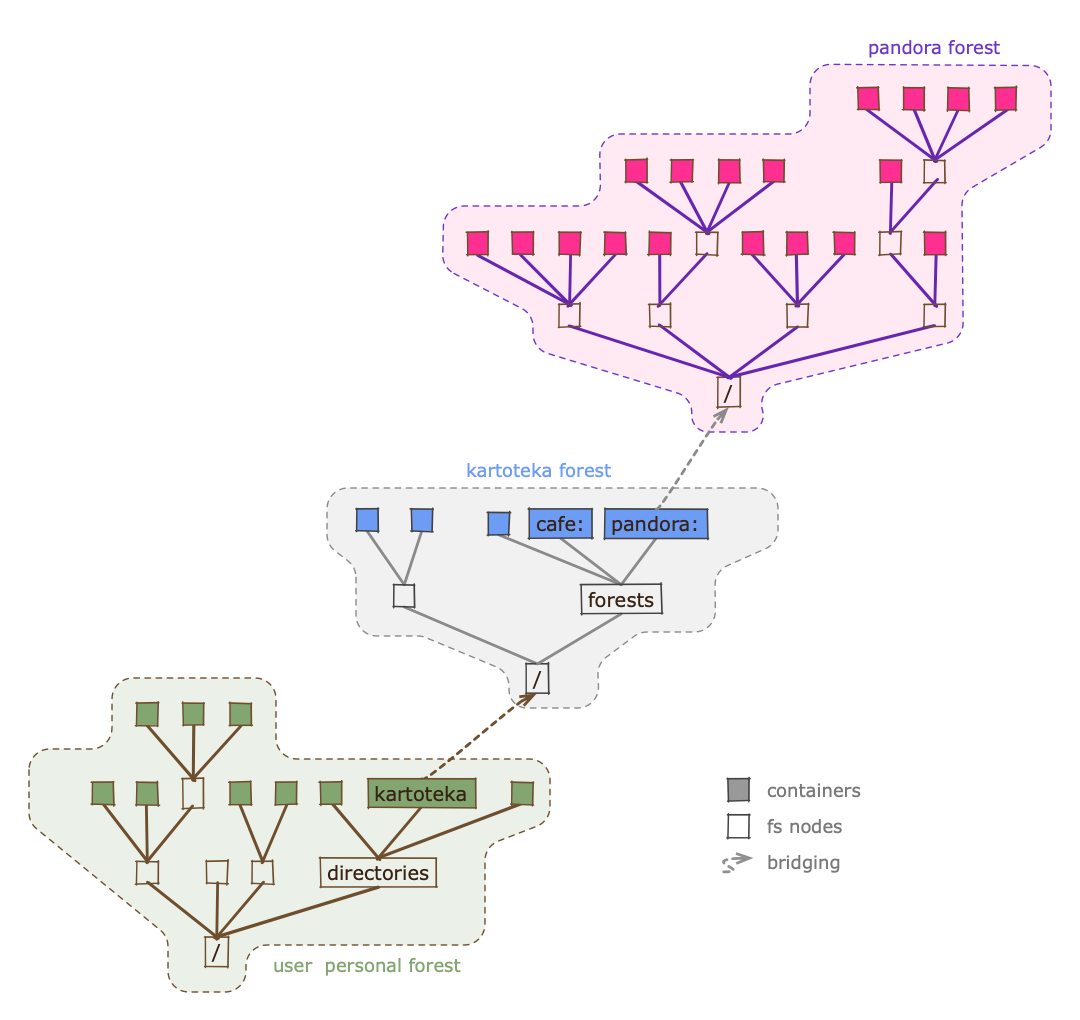

All containers owned by a particular user put together constitute a namespace. We call this namespace a forest.

There is a 1-1 mapping between users and forests. This means that we can think of the user id (in the container address) as a forest id, and vice versa.

You would typically mount the whole forest of a particular user and navigate it like a normal filesystem, using your preferred file manager. This can be done easily with the `wl forest mount` command, as discussed in the Quick Start Guide.

The current Linux client reference implementation uses FUSE to construct the filesystem on the fly, so it can be mounted like any other filesystem on Linux. Additionally, we provide a handy Docker packaging of the client, which lets users run the Linux client on any OS. This Docker image exposes Wildland filesystems over the standard WebDAV protocol, so they can be mounted with most popular file managers.

In the practical bit, we show how to create a simple single-user forest and mount it.

# Bridges to other forests

Wildland allows cascading navigation between forests. This makes it possible to create "directories" that contain links to other forests. These links are a special kind of container, which we call bridges.

The three important pieces of information every bridge provides are:

- the public key of the forest it leads to,
- the address of the user manifest for this target forest (which in turn contains all the information required to mount that forest),
- the path(s) claimed by the bridge within the (source) forest, placing it at a particular location within the filesystem representing the directory forest.
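The bridge fields listed above map naturally onto a small record type. The sketch below is hypothetical: field names are illustrative rather than the actual manifest keys, and the id, key, and URL in the usage lines are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bridge:
    """Hypothetical in-memory model of a bridge manifest (illustrative field names)."""
    owner: str                 # id of the source forest's owner, who signs the manifest
    target_public_key: str     # public key of the forest the bridge leads to
    target_user_manifest: str  # where to fetch the target forest's user manifest
    paths: tuple[str, ...]     # path(s) claimed within the source forest


def bridges_at(bridges: list[Bridge], path: str) -> list[Bridge]:
    """Return the bridges in a forest that claim the given path."""
    return [b for b in bridges if path in b.paths]


# Placeholder values only.
kartoteka = Bridge(
    owner="0xOWNER-PLACEHOLDER",
    target_public_key="PUBLIC-KEY-PLACEHOLDER",
    target_user_manifest="https://example.com/kartoteka.user.yaml",
    paths=("/mydirs/kartoteka",),
)
print(bridges_at([kartoteka], "/mydirs/kartoteka"))
```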

Additionally, each bridge, like any other container, has an owner (whom we can consider the directory's admin) who signs its manifest. A bridge without a valid signature would not be present within the directory forest's filesystem. This way, even if a directory is kept on untrusted infrastructure, the owner's digital signatures protect the integrity of its bridges.

Of course, it is up to the directory forest's owner to decide whether a bridge should be published within their forest, and under which paths. Wildland does not, and cannot, enforce any policy with regard to how directories could or should be constructed and maintained.

# Cascading Addressing

Bridges allow what we call cascading addressing, which in turn allows others to navigate between forests in different ways.

Consider the following path:

```
wildland:@default:/mydirs/kartoteka:/forests/pandora:/docs/Container Addressing:/addressing.pdf
```

It comprises two bridge addresses plus a final container address, i.e.:

- the first bridge, placed at `/mydirs/kartoteka:` within the user's default forest (see below),
- the second bridge, placed at `/forests/pandora:` within the `kartoteka` forest,
- finally, `/docs/Container Addressing` is the address of the container within the `pandora` forest, and `/addressing.pdf` is the specific file within that container.
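The resolution walk can be sketched over a toy, in-memory set of forests. This is an illustration under strong assumptions. Forests are plain dicts keyed by made-up ids, and `@default` is hard-wired to one of them. Bridges point directly at forest ids instead of at user manifests that would have to be fetched and verified. Signature checks are omitted entirely.

```python
# Hypothetical toy forests: each maps bridge paths to target forest ids and
# container paths to container names. All ids and names are made up.
FORESTS = {
    "me": {
        "bridges": {"/mydirs/kartoteka": "kartoteka"},
        "containers": {},
    },
    "kartoteka": {
        "bridges": {"/forests/pandora": "pandora"},
        "containers": {},
    },
    "pandora": {
        "bridges": {},
        "containers": {"/docs/Container Addressing": "container-addressing-docs"},
    },
}
DEFAULT_FOREST = "me"  # what "@default" resolves to in this sketch


def resolve(address: str) -> tuple[str, str, str]:
    """Walk a cascading wildland: address.

    Returns (forest id, container name, file path inside the container).
    Raises KeyError if a bridge or container is missing.
    """
    prefix = "wildland:"
    if not address.startswith(prefix):
        raise ValueError(f"not a wildland address: {address!r}")
    owner, *hops, file_path = address[len(prefix):].split(":")
    forest = DEFAULT_FOREST if owner == "@default" else owner
    # All hops except the last are bridges; the last is the container path.
    *bridge_hops, container_path = hops
    for hop in bridge_hops:
        forest = FORESTS[forest]["bridges"][hop]
    container = FORESTS[forest]["containers"][container_path]
    return forest, container, file_path


print(resolve("wildland:@default:/mydirs/kartoteka:/forests/pandora:"
              "/docs/Container Addressing:/addressing.pdf"))
```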

This means that Wildland is a bit like the Internet, except that it doesn't rely on any centralized directories such as DNS (which is centralized because of its fixed, well-known pool of root DNS servers). Anyone can create a directory and distribute its address to a group of friends or a local community, thus bootstrapping a small network of forests. Such a network can later grow in width, by including more bridges to other users' forests. It can also grow in depth, by making an increasing number of forests reachable indirectly through the forests it bridges to. We like to think of this process as resembling the bottom-up growth encountered, e.g., in the world of plants.

# User's default forests and "root" directories

The resolution of cascading addresses needs to start somewhere. It starts with what we call the user's default forest, which typically corresponds to the user's own personal forest.

The user's personal forest typically contains bridges to other forests, some of them functioning as de-facto directories (e.g. the /mydirs/kartoteka: in the example above is one such bridge).

Upon installing the Wildland client, you need to create at least one user, thus effectively creating a new forest namespace (for more, see the forest-creation section in the Quick Start Guide). Typically, the user would then import one or more other forests, perhaps some well-known forests used by a local community, and use them as directories for finding even more forests. This process effectively creates what traditional DNS would call "root name servers", except that there are no root servers in Wildland.

The following command imports a forest's user manifest and automatically creates a bridge named `/mydirs/some-forest:`. Note the trailing `:`; the client enforces this colon character at the end of every bridge path:

```shell
$ wl u import --path /mydirs/some-forest some-forest.user.yaml
```
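The trailing-colon rule can be expressed as a tiny normalization step. `bridge_path` is a hypothetical helper illustrating the convention described above, not the client's own code.

```python
def bridge_path(path: str) -> str:
    """Return a bridge path ending in exactly one ':' (hypothetical helper)."""
    return path.rstrip(":") + ":"


print(bridge_path("/mydirs/some-forest"))   # /mydirs/some-forest:
print(bridge_path("/mydirs/some-forest:"))  # /mydirs/some-forest:
```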

How the user obtains the manifest of a remote forest is up to them. The manifest, typically a very small file of around 100 bytes, can be sent by email, downloaded from the Web, placed on some blockchain, or delivered by carrier pigeon.

We plan to set up and run a public directory at some point, and likely make it available both via the Web and on one or more blockchain networks. Even so, it is important to stress that Wildland makes it very easy to import many forest manifests of this kind. A person might use a different one for each sphere of their activities: one for work-related matters, another for personal activities, and another for a local community.

# Correspondence between wildland: addresses and filesystem paths

As a side note, please observe that any Wildland container address URL, such as:

```
wildland:@default:/mydirs/kartoteka:/forests/pandora:/docs/Container Addressing:/addressing.pdf
```

corresponds almost exactly to the FS-mapped path to that container, which in the case of the example above would be:

```
~/wildland/mydirs/kartoteka:/forests/pandora:/docs/Container Addressing:/addressing.pdf
```

We consider this URL-FS correspondence to be quite elegant.
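Because of this correspondence, translating an address rooted at `@default` into a path under the default mount point is a simple prefix substitution. The sketch below is hypothetical and deliberately handles only `@default` addresses.

```python
MOUNT_ROOT = "~/wildland"  # default mount point of the reference Linux client


def address_to_fs_path(address: str) -> str:
    """Map a wildland:@default:... address onto the mounted filesystem path.

    Sketch: only addresses rooted at the default forest are handled.
    """
    prefix = "wildland:@default:"
    if not address.startswith(prefix):
        raise ValueError("only @default addresses are handled in this sketch")
    return MOUNT_ROOT + address[len(prefix):]


print(address_to_fs_path(
    "wildland:@default:/mydirs/kartoteka:/forests/pandora:"
    "/docs/Container Addressing:/addressing.pdf"
))
```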

# Storage

Each container can have one or more storage backends assigned to it, each defined by what we call a storage manifest. Assigning more than one storage backend to a container provides redundancy. The reference Wildland client uses the first backend that it manages to mount successfully.
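The fallback rule for multiple backends can be sketched as follows. Backends are modelled as hypothetical zero-argument callables that either return a mount handle or raise `MountError`. The real client's plugin interface is not described here and may differ.

```python
from typing import Callable


class MountError(Exception):
    """Raised by a (hypothetical) backend that cannot be mounted."""


def mount_first_available(backends: list[Callable[[], str]]) -> str:
    """Try each storage backend in order; return the first successful mount."""
    errors = []
    for mount in backends:
        try:
            return mount()
        except MountError as exc:
            errors.append(exc)  # remember the failure and try the next backend
    raise MountError(f"no backend could be mounted ({len(errors)} tried)")


def broken_webdav() -> str:
    raise MountError("WebDAV server unreachable")


def working_s3() -> str:
    return "s3-handle"


print(mount_first_available([broken_webdav, working_s3]))  # s3-handle
```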

A storage manifest might point to an S3 bucket, a WebDAV server, a Dropbox or Google Drive folder, or even more "exotic" types of storage, such as an IMAP4 mailbox. To support different storage-access protocols, our reference Wildland client implements a pluggable architecture. Current plugins can be examined here.

# Storage backend templates

In practical terms, it is convenient to use what we call storage backend templates. These are small, Jinja-based YAML files containing all the details necessary to access a given storage resource, including any URLs, credentials, etc. They are easy to create from the command line, e.g.:

```shell
$ wl template create webdav mywebdav \
    --login <USERNAME> \
    --password <PASSWORD> \
    --url <WEBDAV_SERVER_URL>

$ wl template create dropbox cafe-dropbox --token <YOUR_DROPBOX_ACCESS_TOKEN>
```

The exact parameters used to define a given template depend on the type of the protocol/plugin used and are documented within each of the plugins.

Once a storage template is defined, you can proceed to the container- and forest-creating commands and have the Wildland client generate specific storage manifests.
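The sketch below shows what generating specific storage manifests from a template might look like. It fills a template with template-wide settings plus a per-container value. It is hypothetical throughout. Wildland templates are Jinja-based, whereas this sketch uses Python's standard `string.Template` as a stand-in. The field names (`url`, `login`, `password`, `container_uuid`) are invented for illustration.

```python
from string import Template

# Hypothetical template text; real Wildland templates are Jinja-based and
# their fields may differ. string.Template stands in for Jinja here.
WEBDAV_TEMPLATE = Template(
    "type: webdav\n"
    "url: $url/containers/$container_uuid\n"
    "credentials:\n"
    "  login: $login\n"
    "  password: $password\n"
)


def render_storage_manifest(template: Template, settings: dict, container_uuid: str) -> str:
    """Fill a storage template with template-wide settings plus a per-container value."""
    return template.substitute(settings, container_uuid=container_uuid)


# Placeholder settings, as they might be captured when the template is created.
settings = {"url": "https://dav.example.com", "login": "alice", "password": "secret"}
print(render_storage_manifest(WEBDAV_TEMPLATE, settings,
                              "60a26b55-b880-4267-84d2-817e3636ba45"))
```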

# Upcoming marketplace for leasing of backends

We're currently working towards creating a marketplace from which the client software will be able to automatically obtain storage templates to host the user's containers. This feature will be announced separately.

# Upcoming Compute backends

Further into the future, we also plan to introduce compute backends, which would allow containers to be dynamic and to gather and process information. This topic is discussed in more detail in the W2H paper.

# Access control

Wildland offers several levels of access control, which can be used for fine-grained control of sharing.

# Automatic manifest encryption

Each container- and storage-defining manifest is automatically encrypted to the public key of its owner. More users may need to read the manifest, e.g. to mount the container or the whole forest. For this purpose, the container-, storage-, and forest-creating or modifying commands accept a special `--access` argument. It can be repeated to list the user ids to which the manifest should additionally be encrypted.

For example, the following command creates a storage template that generates storage manifests encrypted to two additional user ids:

```shell
$ wl template create s3 my-aws-bucket \
    --access-key <ACCESS_KEY_RO> --secret-key <SECRET_KEY_RO> \
    --s3-url s3://my-bucket-id \
    --access 0xcd5ad6ed64f28561134818db641d475dbcf43fff7a4517c9cdb1658901842579 \
    --access 0xb26e638663d445c1720168fffde90270157b7561f38f08cbbae9097c44c53fc9
```
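The recipient rule for manifest encryption can be summarized in a few lines. This hypothetical sketch only computes which user ids a manifest would be encrypted to: the owner plus every `--access` id, without duplicates. It does not perform any encryption, and the owner id in the usage line is a placeholder.

```python
def manifest_recipients(owner: str, access: list[str]) -> list[str]:
    """Ids a manifest is encrypted to: always the owner, plus any --access ids.

    Order is preserved and duplicates are dropped.
    """
    recipients = [owner]
    for user_id in access:
        if user_id not in recipients:
            recipients.append(user_id)
    return recipients


print(manifest_recipients(
    "0xOWNER-PLACEHOLDER",
    [
        "0xcd5ad6ed64f28561134818db641d475dbcf43fff7a4517c9cdb1658901842579",
        "0xb26e638663d445c1720168fffde90270157b7561f38f08cbbae9097c44c53fc9",
    ],
))
```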

# Container content encryption

Container content encryption is distinct from manifest encryption and requires a dedicated backend which sits on top of the FS exposed by the "bare" infrastructure (such as the S3 bucket or WebDAV server), and which seamlessly performs encryption/decryption of file contents and path names. This encryption backend can be used in the same way as any other backend when defining storage for a particular container or forest. More details on the use of this backend can be found here.

# Infrastructure-level ACL

Finally, access to containers can be controlled by the underlying infrastructure. A WebDAV server would typically require some form of user/password authentication and perhaps, depending on the user, offer read-write or read-only mode. An AWS S3-based storage would enforce IAM user-specific policy when accessing a particular bucket. Other types of storage might implement other access control.

Wildland is, in general, agnostic with regard to these infrastructure-specific mechanisms. Its expressive storage manifest syntax still makes it possible to use them. More on this topic can be found here.